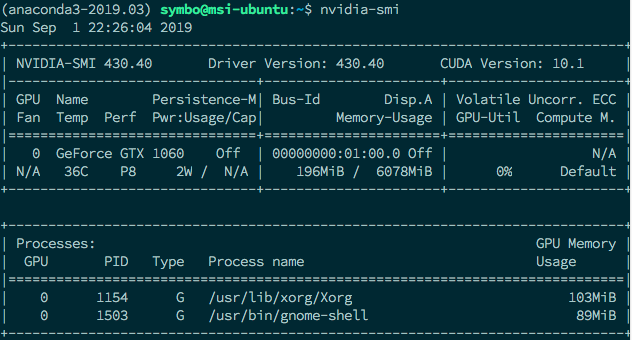

Use GPU in your PyTorch code. Recently I installed my gaming notebook… | by Marvin Wang, Min | AI³ | Theory, Practice, Business | Medium

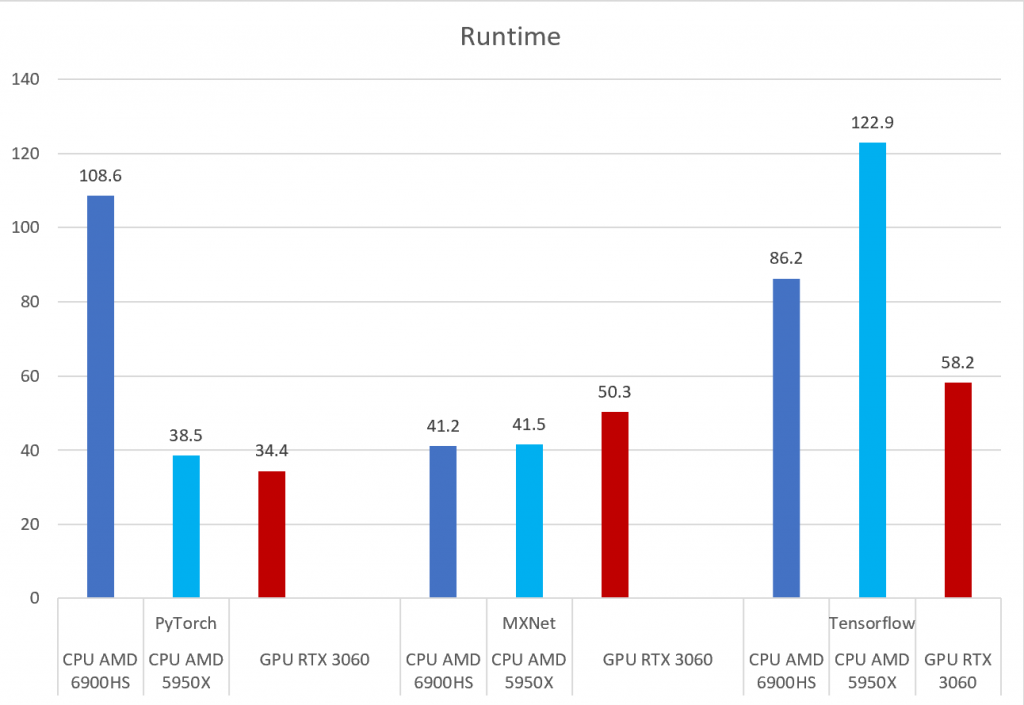

PyTorch, Tensorflow, and MXNet on GPU in the same environment and GPU vs CPU performance – Syllepsis

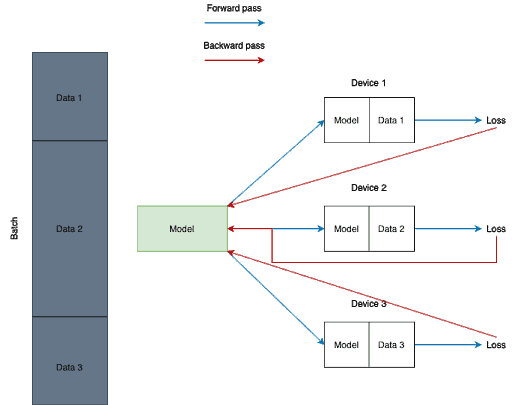

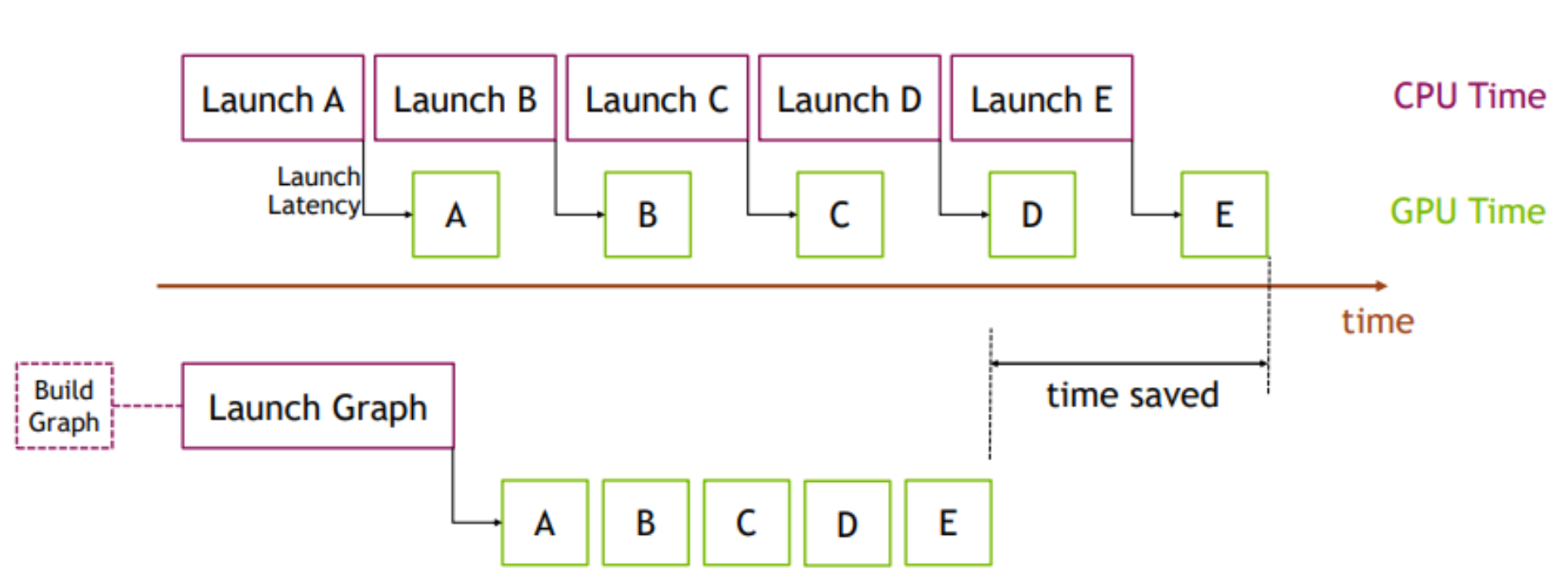

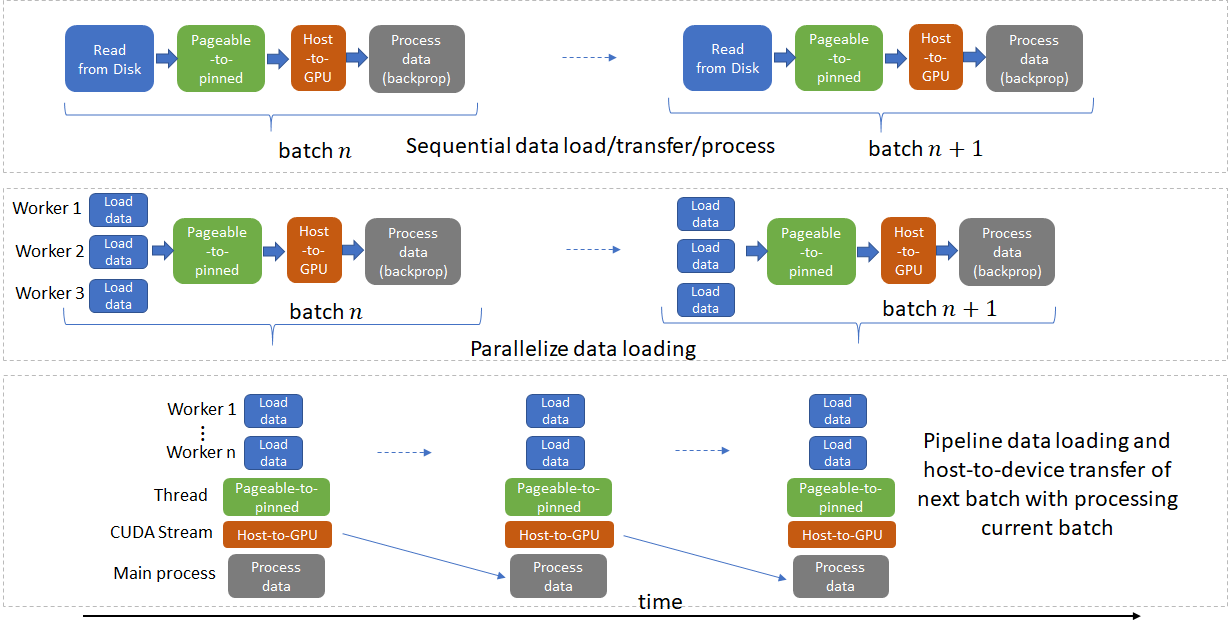

How Pytorch 2.0 Accelerates Deep Learning with Operator Fusion and CPU/GPU Code-Generation | by Shashank Prasanna | Apr, 2023 | Towards Data Science

![[D] My experience with running PyTorch on the M1 GPU : r/MachineLearning](https://preview.redd.it/p8pbnptklf091.png?width=1035&format=png&auto=webp&s=26bb4a43f433b1cd983bb91c37b601b5b01c0318)